Ever since I started playing strategy and role-playing video games, I've been exposed to the concept of the fog of war. In video game speak, the fog of war simulates the unexplored parts of the map you are on. It forces you to adapt your strategy to what you uncover in order to complete your objectives on that map.

Typically, as you move through the map, information about your surroundings is revealed progressively. Note, though, that this information is temporary. In many newer games, when you leave an area, the fog of war rolls back in and what you knew about that area becomes history. As in testing, information is key to achieving your objective in any of these games. You might have a set of documented requirements that may or may not be up to date, and it is still up to you to confirm whether the information you have at hand is still relevant.

What is information and why is it important? In information theory, information refers to the reduction of uncertainty. The point is, when it comes to testing, the more we know, the better we are. Come to think of it, a lot of the testing techniques out there are about finding information. Yet a tester's role often ends up focused solely on confirming known information. I'm not saying that confirming things is wrong, but if that's all you do, it becomes wrong.

Think of the fog of war. Most software development projects nowadays are service oriented. These projects rely on so many external dependencies that there is a practically unbounded number of possible sources of failure. These dependencies make your fog of war shift continuously, and a whole lot faster. Service dependencies are one thing; people also contribute to that risk. As W. Edwards Deming aptly put it in his book Out of the Crisis (page 11): "Defects are not free. Somebody makes them, and gets paid for making them."

How did this understanding of the fog of war change my mindset about testing? Simply said, things are not always what they seem. What we knew about something yesterday might no longer hold two days from now. In order to keep testing effectively, we need to keep learning as well. We are now at a juncture where expert testing is no longer defined by how long you have been a tester, how many certifications you hold, or how good your test plan is.

Most readers of this blog have driven a car, right? How do you know that a car is not moving? Through the instrument panel? Through a frame of reference, e.g., you look at the trees or other stationary objects around you and conclude that if they are not moving, you must not be moving either? I wish it were that easy with software. Because of the many associated risks, we no longer have an absolute frame of reference. Going back to my car example, it is as if your instruments are unreliable and the trees and other "stationary" objects are moving independently.

This frame of reference is usually referred to as an oracle. If you have taken a BBST class or have been hanging around context-driven testers long enough, you'll know that there is no such thing as a true oracle. This is why we rely on heuristics to build our own frames of reference, ones that apply to our particular context and are bounded by time.

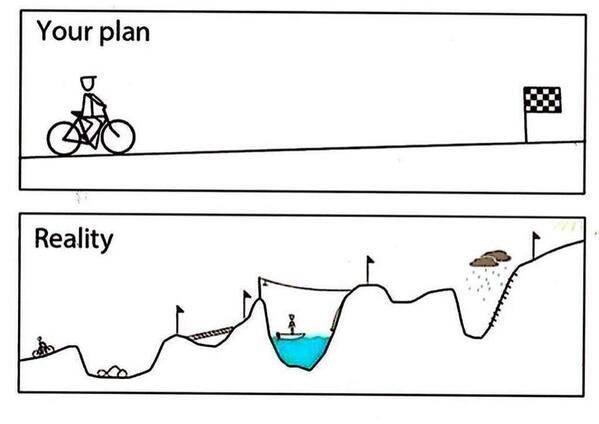

The other effect from the fog of war that I've realized is that plans are only as good as your next update. Test Plans are not useless, as long as they don't remain stale. I have enough personal evidence that as you go through any testing cycle, simultaneous test design and test execution is more effective than any pre-planning that's done. Tests are more relevant and up-to-date. One of the challenges in this approach is results documentation. Primarily because testers, who are human, tend to be lazy. But this risk should be mitigated by session based test management.

I would like to encourage you to share what your experiences are with regards to the testing Fog of War. What have you done to mitigate this risk? Aside from Exploratory Testing, what have you done to alleviate this situation?

|

| Fog of War from Sid Meiers' Civilization |

"Empirical explorations ultimately change our understanding of which questions are important and fruitful and which are not." - Lawrence Krauss
